Beyond Brainstorming

Ever stare at a long list of potential puzzle names, themes, or concepts and feel completely stuck on which to choose? You're not alone. As puzzle designers, we often generate dozens of creative options, but the selection process can become overwhelming.

When faced with too many good options, standard ranking methods fall short. In this article, I'll share two powerful evaluation techniques I've developed that make the selection process both effective and enjoyable: ELO Tournaments and Expert Panel Consensus Ranking.

The Problem with Traditional Selection Methods

Traditional methods typically involve staring at a list and trying to pick the "best" option through gut feeling or basic rating scales. These approaches have serious limitations:

- Long lists cause decision fatigue

- Options in the middle of the list often get overlooked

- It's difficult to articulate why one option feels better than another

- The process feels tedious rather than creative

Method 1: The ELO Tournament Approach

Inspired by chess's Elo ratings (named after Arpad Elo, and often written "ELO") and March Madness brackets, the ELO Tournament method transforms selection into a competitive, engaging process.

How It Works:

- Generate a list of candidates (names, themes, puzzle concepts)

- Arrange them in tournament-style brackets

- Evaluate each head-to-head matchup

- Advance winners until you crown a champion
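The four steps above can be sketched in a few lines of Python. This is a minimal sketch rather than the exact procedure I run with an AI: the `judge` function stands in for whatever evaluates a matchup (you, a friend, or a model), and every name except "Dynamic Prompt Creator" is a hypothetical placeholder. The toy judge simply prefers the shorter name.

```python
from typing import Callable, List


def run_bracket(candidates: List[str],
                judge: Callable[[str, str], str]) -> List[List[str]]:
    """Run a single-elimination bracket and return the field at every round."""
    if not candidates:
        raise ValueError("need at least one candidate")
    field = list(candidates)
    rounds = [field]
    while len(field) > 1:
        winners = []
        # Pair neighbours in list order: (0, 1), (2, 3), ...
        for i in range(0, len(field) - 1, 2):
            winners.append(judge(field[i], field[i + 1]))
        # With an odd field, the last candidate gets a bye.
        if len(field) % 2 == 1:
            winners.append(field[-1])
        field = winners
        rounds.append(field)
    return rounds


def shorter_name_wins(a: str, b: str) -> str:
    """Toy judge (an assumption for illustration): prefer the shorter name."""
    return a if len(a) <= len(b) else b


# Hypothetical candidates, apart from "Dynamic Prompt Creator".
names = ["Dynamic Prompt Creator", "PromptForge", "Promptly", "Prompt Studio"]
rounds = run_bracket(names, shorter_name_wins)
champion = rounds[-1][0]
print(champion)
```

Swapping in a different `judge` is the whole point: the bracket logic stays the same while the evaluation criteria change.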

Real-World Example:

When developing a brand name for a digital product related to prompt engineering, I first generated a dozen candidates. I then set up an ELO tournament by instructing an AI to:

"Assign a fictitious panel of brand experts, who will conduct an ELO style competition, March Madness style. Add all the suggestions from the document, and include 'Dynamic Prompt Creator'. Fill out the remaining brackets with other relevant suggestions. Then display the first round matchups."

The result was a structured tournament where each name competed directly against others, with commentary explaining each decision.

Benefits of the Tournament Approach:

- Focused Comparisons: Instead of evaluating everything at once, you only compare two options at a time

- Detailed Feedback: Each matchup generates specific insights about strengths and weaknesses

- Engagement Factor: The competitive format makes evaluation feel like a game rather than a chore

- Unexpected Insights: Dark horse candidates sometimes emerge victorious, challenging your initial assumptions
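A bracket knocks a candidate out after one loss. If you would rather keep score the way chess does, the standard Elo update gives each candidate a numeric rating that moves after every matchup. The sketch below uses the usual Elo formula. The starting rating of 1500 and the K-factor of 32 are conventional values I am assuming here, not part of the tournament prompt above.

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, a_won: bool,
               k: float = 32.0) -> Tuple[float, float]:
    """Return the new (A, B) ratings after one head-to-head matchup."""
    expected_a = expected_score(rating_a, rating_b)
    score_a = 1.0 if a_won else 0.0
    new_a = rating_a + k * (score_a - expected_a)
    # B's change mirrors A's, so the total rating is conserved.
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - expected_a))
    return new_a, new_b


# Two evenly rated candidates; the first one wins the matchup.
winner_rating, loser_rating = update_elo(1500.0, 1500.0, a_won=True)
print(winner_rating, loser_rating)
```

Ratings like these let every candidate play several matchups, so one unlucky pairing does not end a strong option's run.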

Method 2: Expert Panel Consensus Ranking

For more complex creative decisions, the collaborative expertise of a simulated panel often produces richer results than head-to-head matchups alone.

How It Works:

- Define the specific challenge or decision

- Assemble a panel of experts with relevant specialties

- Have each expert evaluate options independently

- Facilitate discussion to reach consensus

- Document reasoning and recommendations
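One way to turn independent expert rankings into a consensus is a Borda count, where each option earns points for every option ranked below it. The panel process above does not prescribe a scoring rule, so treat this as one possible sketch. The expert titles are borrowed from the examples later in this article, and the theme names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def borda_consensus(rankings: Dict[str, List[str]]) -> List[str]:
    """Combine each expert's ranking (best first) into one consensus order."""
    scores: Dict[str, int] = defaultdict(int)
    for ranking in rankings.values():
        n = len(ranking)
        for position, option in enumerate(ranking):
            # First place earns n - 1 points, last place earns 0.
            scores[option] += n - 1 - position
    # Highest score first; ties are broken alphabetically.
    return sorted(scores, key=lambda option: (-scores[option], option))


# Hypothetical panel and options, for illustration only.
panel = {
    "Crossword Puzzle Constructor": ["Theme A", "Theme B", "Theme C"],
    "Early Learning Specialist": ["Theme B", "Theme A", "Theme C"],
    "Steampunk Novelist": ["Theme B", "Theme C", "Theme A"],
}
consensus = borda_consensus(panel)
print(consensus)
```

The numbers only summarize the panel. The documented reasoning behind each expert's ranking is still where most of the insight lives.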

This method extends beyond simple ranking to include rich analysis from multiple perspectives.

The MOESHA Framework:

For my expert panels, I use a framework called MOESHA (Mixture Of Experts Solving Hard Assignments). This approach simulates a team of specialists, each bringing unique insights to the problem.

I've tasked MOESHA with some pretty wild challenges:

Once, I needed an "Infinite Message Generator" for cryptogram puzzles and asked MOESHA to tackle it. Another time, I had the experts "research diminishing returns by analyzing the pros and cons of interdisciplinary methods for curating specialized lists." In practice, that meant exploring how important role-playing is for creating unique word lists for puzzles. My favorite was asking MOESHA to discuss "a vague idea of a pencil and paper logic puzzle" in which I wanted to bring "Pop Goes the Weasel" into the far future, where monkeys and weasels had figured out how to teleport each other. The resulting discussions yielded puzzle concepts I never would have created on my own.

The framework offers several interaction models:

- Round-Table Model: Equal input from all experts building toward consensus

- Dialectic Model: Contrasting viewpoints that resolve through synthesis

- Network Model: Parallel discussions with cross-pollination of ideas

- Evolutionary Model: Generating variants and selecting the strongest elements

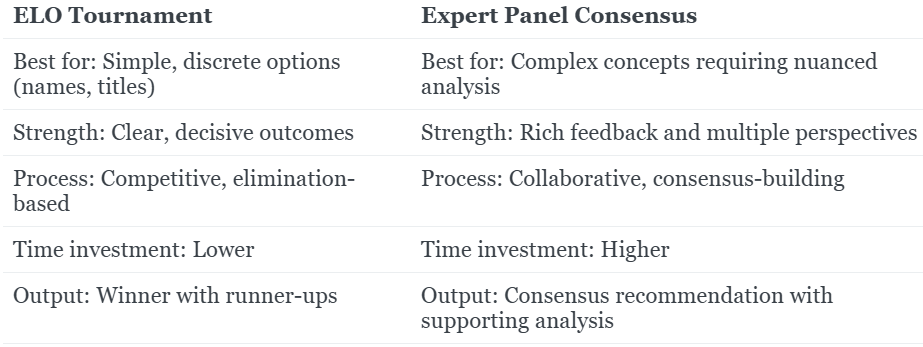

Comparing the Two Methods

Practical Implementation for Puzzle Designers

Here's how you can apply these methods to common puzzle design challenges:

For ELO Tournaments:

- Puzzle Names or Titles: Run a tournament between different name options

- Visual Theme Selection: Compare different art styles or themes head-to-head

- Puzzle Mechanics: Evaluate different rule variations through direct comparison

For Expert Panels:

- Puzzle Book Concepts: Have experts evaluate overall concept viability

- Target Audience Analysis: Get feedback on how different puzzle types serve specific audiences

- Publishing Strategy: Evaluate platforms (Etsy, KDP, Gumroad) for different puzzle types

Tools to Get Started

Want to try these evaluation methods without building everything from scratch? I've created some free resources to help you get started right away.

Promptopia: Your MOESHA Playground

My web app Promptopia includes the full MOESHA framework, making it easy to convene expert panels for your creative puzzles. You can access various interaction models and expert configurations without complex setup. Learn more about Promptopia here.

ELO Tournament Template

For tournament-style evaluations, here's my ready-to-use template:

I want to conduct an ELO-style tournament for [PURPOSE OF COMPARISON]. Please use the list I've uploaded and add "[ADDITIONAL ITEM]" to the competition.

Create a fictitious panel of [ODD NUMBER] experts in [FIELD], who will evaluate each item based on [CRITERIA].

Set up a March Madness style bracket with the items arranged in the order they appear in the list. Fill remaining brackets with relevant alternatives you suggest.

Display the first round matchups, then proceed through each round based on expert evaluation until we have a champion. Use artifacts for the tournament display and chat for commentary.

This template works for comparing anything from puzzle names to theme concepts. The [PURPOSE OF COMPARISON] could be a title, name, or tagline. Add your own idea with [ADDITIONAL ITEM] to see how it stacks up. Use an [ODD NUMBER] of experts to prevent ties in scoring.

Be specific with [FIELD]: instead of "puzzle design," try "Steampunk Novelists," "Early Learning Specialists," or "Crossword Puzzle Constructors." For [CRITERIA], include what matters most: "short and memorable," "humorous," "fits the genre," or "kid-friendly."
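If you reuse the template often, a small script can fill in the placeholders and catch the two easiest mistakes: an even number of experts and a placeholder left blank. This is only a sketch. The `fill_template` helper is a hypothetical name, the template is shortened to its first two paragraphs, and the values are examples.

```python
import re
from typing import Dict

# First two paragraphs of the template above, shortened for the example.
TEMPLATE = """I want to conduct an ELO-style tournament for [PURPOSE OF COMPARISON]. Please use the list I've uploaded and add "[ADDITIONAL ITEM]" to the competition.

Create a fictitious panel of [ODD NUMBER] experts in [FIELD], who will evaluate each item based on [CRITERIA]."""


def fill_template(template: str, values: Dict[str, object]) -> str:
    """Replace each [PLACEHOLDER] with its value and reject incomplete prompts."""
    if int(values["ODD NUMBER"]) % 2 == 0:
        raise ValueError("use an odd number of experts to avoid tied votes")
    for name, value in values.items():
        template = template.replace(f"[{name}]", str(value))
    # Anything still in [UPPERCASE BRACKETS] was never filled in.
    leftover = re.findall(r"\[[A-Z ]+\]", template)
    if leftover:
        raise ValueError(f"unfilled placeholders: {leftover}")
    return template


# Example values only; substitute your own.
prompt = fill_template(TEMPLATE, {
    "PURPOSE OF COMPARISON": "a product name",
    "ADDITIONAL ITEM": "Dynamic Prompt Creator",
    "ODD NUMBER": 5,
    "FIELD": "Crossword Puzzle Constructors",
    "CRITERIA": "short and memorable",
})
print(prompt)
```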

DIY Approach

If you prefer to start from scratch, here's a simple way to begin:

For an ELO Tournament:

- Generate 8-16 options for whatever you're evaluating

- Create a bracket pairing options against each other

- For each matchup, write brief notes on the strengths and weaknesses of each option

- Advance winners until you have a champion
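Before drawing the bracket, it helps to know how many slots it needs. A bracket works most cleanly with a power-of-two field, so a list of 12 options leaves four open slots to fill with extra suggestions or to treat as byes. The sketch below is one way to plan that. `plan_bracket` is a hypothetical helper and the option names are placeholders.

```python
from typing import List, Optional, Tuple

Matchup = Tuple[Optional[str], Optional[str]]


def plan_bracket(options: List[str]) -> Tuple[List[Matchup], int, int]:
    """Return first-round pairings, the number of open slots, and the round count."""
    if len(options) < 2:
        raise ValueError("need at least two options")
    size = 1
    while size < len(options):
        size *= 2  # grow to the next power of two
    open_slots = size - len(options)
    rounds = size.bit_length() - 1  # log2 of the bracket size
    # None marks a slot still to be filled (or treated as a bye).
    slots: List[Optional[str]] = list(options) + [None] * open_slots
    first_round = [(slots[i], slots[i + 1]) for i in range(0, size, 2)]
    return first_round, open_slots, rounds


# Twelve placeholder options, listed in the order they were brainstormed.
options = [f"Option {n}" for n in range(1, 13)]
first_round, open_slots, rounds = plan_bracket(options)
print(open_slots, rounds)
for matchup in first_round:
    print(matchup)
```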

For an Expert Panel:

- Define 3-5 different expert perspectives relevant to your challenge

- For each expert, list their primary concerns and evaluation criteria

- Have each "expert" (each of which can be a different aspect of your own thinking) evaluate the options

- Look for consensus and note areas of disagreement
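To spot consensus and disagreement at a glance, you can compare where each option lands across the perspectives. The sketch below measures the gap between an option's best and worst placement: a spread of 0 means every perspective agreed, and larger spreads point to the options worth discussing. The perspective names and options are hypothetical, and the spread is just one simple measure I am assuming for illustration.

```python
from typing import Dict, List


def rank_spread(rankings: Dict[str, List[str]]) -> Dict[str, int]:
    """For each option, return the gap between its best and worst placement."""
    placements: Dict[str, List[int]] = {}
    for ranking in rankings.values():
        for place, option in enumerate(ranking, start=1):
            placements.setdefault(option, []).append(place)
    return {option: max(places) - min(places)
            for option, places in placements.items()}


# Hypothetical perspectives and options, for illustration only.
perspectives = {
    "Solver": ["Concept X", "Concept Y", "Concept Z"],
    "Publisher": ["Concept X", "Concept Z", "Concept Y"],
    "Artist": ["Concept X", "Concept Y", "Concept Z"],
}
spread = rank_spread(perspectives)
agreed = [option for option, gap in spread.items() if gap == 0]
print(spread, agreed)
```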

Conclusion: Making Selection a Creative Act

By transforming evaluation from a tedious task into an engaging process, these methods extend creativity beyond the initial brainstorming phase. The next time you're stuck choosing between puzzle names, themes, or concepts, try structuring your decision as a tournament or convening an expert panel.

You'll not only make better decisions but also gain valuable insights that improve your entire puzzle design process.