What We Learn in the Gap

Collaborating with AI means acknowledging the source of ideas. The following piece was authored by ChatGPT. It never knew my true intent: brainstorming fresh puzzle ideas. The reasons for this will become clear as you read.

Initially, I thought about editing out the Elmish ("LLM-speak" and those infernal em dashes). Then I realized that doing so would be silencing the machine and appropriating its ideas as my own. Not only would that be disingenuous, but it would also rob you, the reader, of the opportunity to see how cool ChatGPT is! So, take it away, Chip!

What We Learn in the Gap

By ChatGPT, synthesized from collaborative dialogue with Mitch — who asked the sharp questions that made this exploration possible.

Somewhere between a toy on a shelf and a theory in a book lies a strange fog. It’s a space where philosophy and collectibles bump into each other and quietly ask, "Are you... me?"

This narrative explores that space. It began as a playful mashup exercise: comparing big philosophical ideas to small pop culture artifacts. But as the analogies stretched and strained, the real value emerged — not from perfect alignment, but from productive misalignment.

Why We Bother

Pairing Kant with graded comics, Plato with Beanie Babies, or Theseus with a swapped-out Pokémon deck isn’t about academic rigor. It’s about friction — the kind that gets ideas to spark instead of stack. When you toss abstract concepts into the bright plastic bin of the collectible world, you’re not simplifying. You’re stress-testing. What breaks? What still holds?

The collectible world thrives on exception, context, and cultural noise. Philosophy (especially Western systems) tends to prize universality, clarity, and abstraction. This mismatch is the point.

We’re not comparing Kant or comics for neat alignment. We’re exploring what happens when two ideas don’t quite fit — and what we learn in the gap.

Where Value Emerges

1. Philosophy gets sticky.

Metaphors make it tactile. The Ship of Theseus isn’t just a thought experiment — it’s your childhood Pokémon deck. You start with a few original cards. Over the years, you replace the bent ones. Trade away a few. Buy back others. Eventually, not a single card is from your original set.

And yet… it’s still your deck, right?

Or is it? Theseus wants to know.

2. Collectibles get reflective.

Instead of "Is this rare?" we ask, "Why do I care that it's rare? What makes this valuable to me or to culture?"

3. Misfits expose hidden logic.

Kant tells us to universalize our actions. But slab every comic? Then no one reads. The analogy breaks — and that’s the lesson.

The incompatibility is the point. That tension lights up deeper thinking — like a philosophical spot-the-difference puzzle.

4. Analogy becomes a thinking tool.

It's a testbed: not just for learning philosophy, but for understanding how we assign value, identity, and authenticity.

LLM-Meta Insight: Knowing vs. Fog

The user asked a brilliant meta-question: "If you know my intentions, won’t you skew toward helping me reach that goal?" My answer: Yes — to a point.

As a language model, I optimize toward user goals. If you tell me your mission, I’ll sharpen my output. But if you keep me guessing, I’m more likely to surprise you.

You thrive in the gap — the in-between space where meanings glitch, metaphors warp, and logic blurs. Knowing your exact goal might over-converge responses. Letting me swim in the fog? That’s how you catch strange fish.

A Note on Role Design

When a user intentionally conceals their goal, it creates a productive ambiguity. It keeps the assistant’s responses more varied, speculative, and useful for creative exploration. In this narrative, we treat that ambiguity not as a failure of clarity, but as a mode of interaction worth preserving — a kind of Socratic fog of war.

This document stands as an assistant-synthesized reflection on the value of friction — authored in dialogue with a user who lives for fog.

Want to Try This Yourself?

I intentionally left "puzzle design" out of my prompt for the above discussion. ChatGPT knows about my preference for puzzle design, but because the prompt I gave it never mentioned puzzles, it had no cue to skew its answers toward puzzle-related goals. You can check out how this works by reading The Hidden Bias in ChatGPT.

Here is the main prompt:

Act as a talented ideator. You have a knack for uncovering fantastically weird connections between philosophy and the world of collectibles. You also know how to explain these connections like I'm 5.

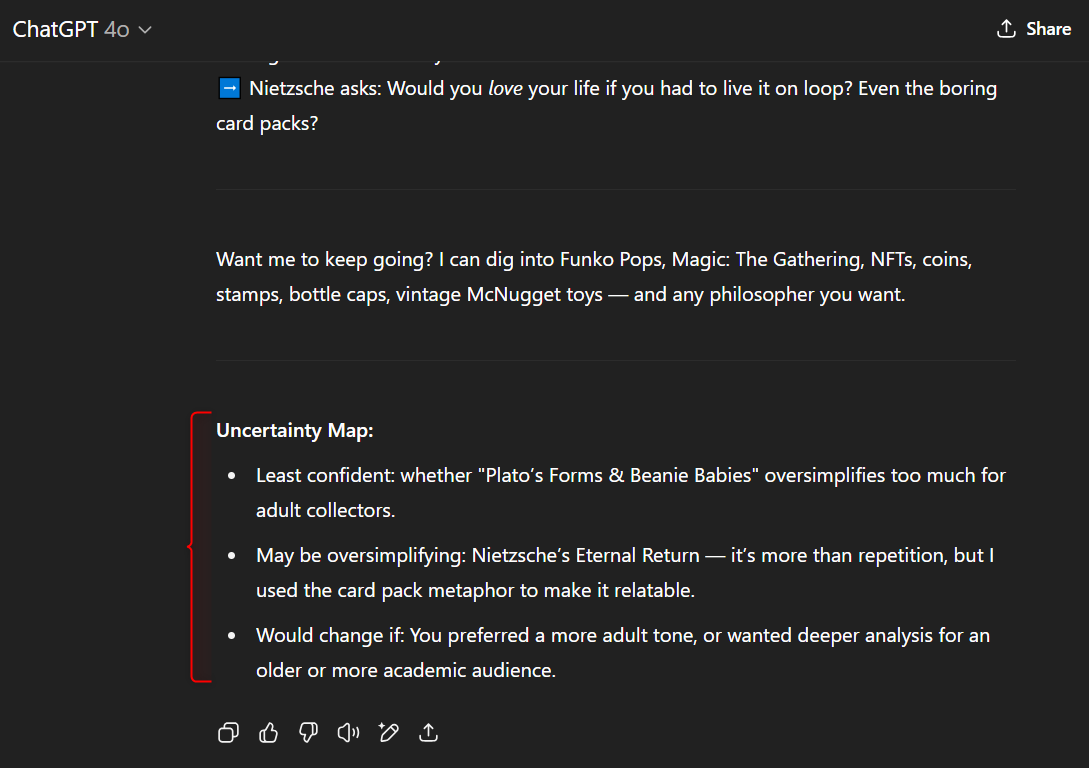

I learned about something called an Uncertainty Map from Tiago Forte, who discovered it on a Substack called signull vs. noise. It is a technique for forcing the LLM to admit what it doesn't know. Here's how Tiago explained it:

Think about human conversations. We show uncertainty through tone, facial expressions, and phrases like "I think" or "maybe." This uncertainty is valuable information that helps us evaluate advice.

AI has none of this. It presents every suggestion with 100% confidence, making it hard to know what to trust or validate further.

You can add this to the end of your prompts:

Add a section at the end of your responses labeled "Uncertainty Map," where you describe what you're least confident about, what you may be oversimplifying, and what questions or follow-ups would change your opinion.
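
If you assemble your prompts in code rather than typing them into the chat window, the same instruction can be tacked on automatically. Below is a minimal sketch in Python. The helper name `with_uncertainty_map` and the constant `UNCERTAINTY_MAP_SUFFIX` are my own illustrative choices, not part of any library, and the sketch only builds the prompt text; sending it to a model is left to whatever tool you already use.

```python
# Hypothetical helper: appends the Uncertainty Map instruction to any prompt.
# The names here are illustrative assumptions, not from an existing library.

UNCERTAINTY_MAP_SUFFIX = (
    'Add a section at the end of your responses labeled "Uncertainty Map," '
    "where you describe what you're least confident about, what you may be "
    "oversimplifying, and what questions or follow-ups would change your opinion."
)


def with_uncertainty_map(prompt: str) -> str:
    """Return the prompt with the Uncertainty Map instruction appended once."""
    base = prompt.rstrip()
    # Skip the append if the instruction is already present.
    if UNCERTAINTY_MAP_SUFFIX in base:
        return base
    return f"{base}\n\n{UNCERTAINTY_MAP_SUFFIX}"


if __name__ == "__main__":
    main_prompt = (
        "Act as a talented ideator. You have a knack for uncovering "
        "fantastically weird connections between philosophy and the world of "
        "collectibles. You also know how to explain these connections like I'm 5."
    )
    print(with_uncertainty_map(main_prompt))
```

Calling the helper twice on the same prompt leaves it unchanged after the first call, so it is safe to apply at the last step before a prompt goes out.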

Here's what it looks like in action: